Thinking About Channel Changes and More

Maybe it's the onslaught of Comcast X1 ads for voice-activated remote controls. Perhaps it's the success of this week's hit movie "A Quiet Place," about a world where people must remain as silent as possible and communicate non-verbally. Possibly it's the general malaise about thought control. Or maybe it's because I've seen so many prototypes of "telepathy" projects that convert brainwaves into actions.

Whatever the reason, this week's announcement from the Massachusetts Institute of Technology Media Lab about "Alter Ego," a "wearable, personal silent speech interface," seemed well-timed.

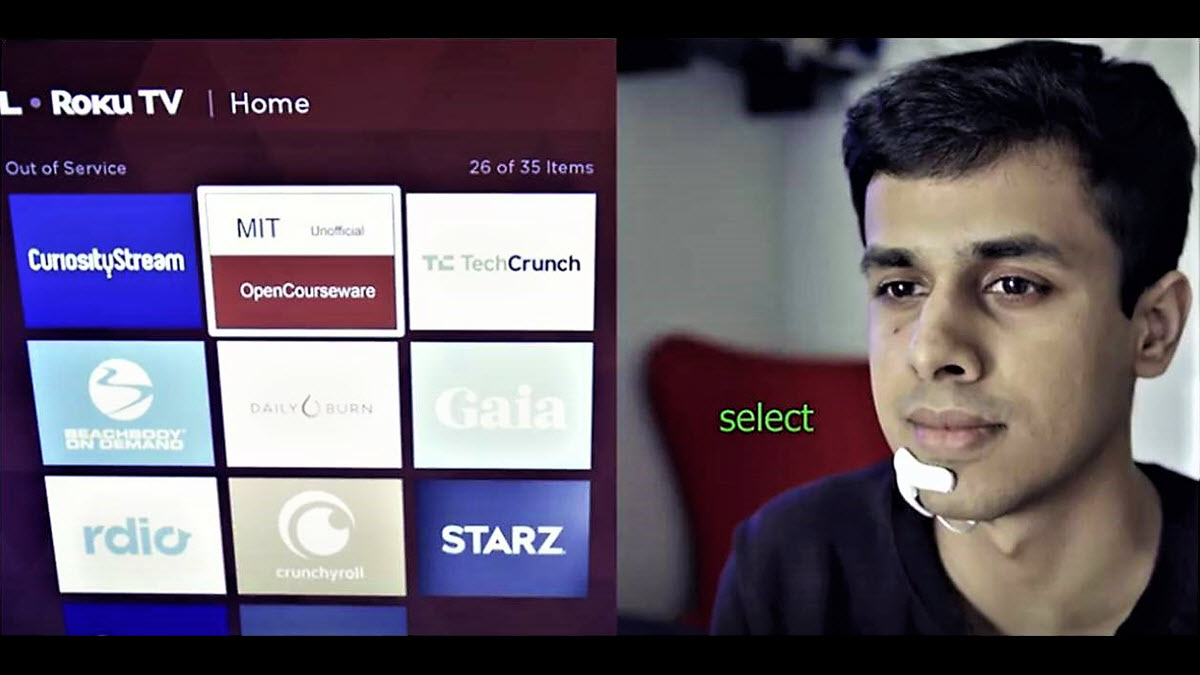

This short video demonstrates how it could be used, including an example of cross-platform video navigation. When the viewer decides which channel to watch, he thinks (actually sub-vocalizes) the word "Select," and that video feed appears on the screen. The developers call it an "intelligence-augmentation (IA) device" and foresee applications in the Internet of Things and other human-machine situations.

Brain-controlled devices have been around for years, and not just in science fiction. At trade shows, I've tried on funny-looking devices such as the neuroheadset from Emotiv, a San Francisco bio-informatics and technology company. In a related vein, at an NCTA convention a few years ago, Affectiva, an emotion-measurement technology firm that also grew out of the MIT Media Lab, demonstrated its technology for recognizing human emotions via physiological responses. Although it did not rely on brainwaves, the system was promoted for its value in gauging viewers' responses to what they were watching - a kind of emotional ratings.

Back to Alter Ego: The 11-page technical paper describing it, delivered recently at the 23rd International Conference on Intelligent User Interfaces, explains how a viewer's thoughts, expressed "using natural language, without discernible muscle movements and without any voice," can be communicated to a computing device, such as a video monitor.

The project, from the MIT Media Lab's "Fluid Interfaces" group, involves a device that recognizes words as you silently speak them. The paper's authors, Arnav Kapur, Shreyas Kapur and Pattie Maes, explain how the aural output (bone conduction headphones) allows you to conduct a bi-directional conversation with a computing device. They describe how the system captures neuromuscular signals from the surface of the user's skin; hence, unlike other proposed brain-computer interfaces (BCIs), it does not have access to private information or thoughts. They said that in their current research, the system achieves a median word accuracy of 92%.

In addition to controlling a TV set and other potential entertainment devices, MIT's video shows a student shopping in a store (adding up the prices on a shopping list), playing games (video and board games) and interacting with other humans on campus. The authors explain that using silent speech interfaces with telecommunications devices, speech-based smart assistants and social robots eliminates the stigma - i.e., weirdness - of talking aloud to a machine or allowing others to overhear what you're saying.

The video also shows that the current version of the "headset" involves a large - let's admit it: dorky - contraption that fits over the ear and runs down the cheek and onto the chin. Peripheral devices such as lapel cameras and smart glasses could communicate directly with the device and provide contextual information.

Over the decades, I've been on the MIT campus and seen students (like the one in this video) walking around wearing or using futuristic gear (predecessors of Google Glass and wireless communicators). Although first attempts don't always lead to actual mass-market products, Alter Ego exemplifies the early-stage thought process behind evolving technologies.

As the authors explain in their paper, "We seek to ... couple human and machine intelligence in a complementary symbiosis."

"As smart machines work in close unison with humans, through such platforms, we anticipate the progress in machine intelligence research to complement intelligence augmentation efforts," they conclude, "which would lead to an eventual convergence - to augment humans in wide variety of everyday tasks, ranging from computations to creativity to leisure."

For system developers, their final message is particularly relevant: "We envision that the usage of our device will interweave human and machine intelligence to enable a more natural human-machine symbiosis that extends and augments human intelligence and capability in everyday lives."

That's worth thinking about for a minute - preferably sub-vocally.

Contributor Gary Arlen is known for his insights into the convergence of media, telecom, content and technology. Gary was founder/editor/publisher of Interactivity Report, TeleServices Report and other influential newsletters; he was the longtime “curmudgeon” columnist for Multichannel News as well as a regular contributor to AdMap, Washington Technology and Telecommunications Reports. He writes regularly about trends and media/marketing for the Consumer Technology Association's i3 magazine plus several blogs. Gary has taught media-focused courses on the adjunct faculties at George Mason University and American University and has guest-lectured at MIT, Harvard, UCLA, University of Southern California and Northwestern University and at countless media, marketing and technology industry events. As President of Arlen Communications LLC, he has provided analyses about the development of applications and services for entertainment, marketing and e-commerce.