Why Generative AI Poses Challenges for Content Creators

Prompt engineering efforts should keep context, empathy in mind


The democratization of generative AI has brought about a transformative shift in society, empowering individuals to participate actively in communication and content creation. Platforms like ChatGPT exemplify this shift: their conversational, back-and-forth style of interaction has significantly changed interactive and creative experiences. AI chatbots offer accessible support in the form of information, resources and emotional assistance, particularly in situations where human interaction may be difficult. Many users value the ability to express themselves to a nonjudgmental AI, along with the convenience and anonymity of digital communication.

However, it is important to recognize the limitations and risks of relying solely on AI agents, especially in sensitive and vulnerable situations. While chatbots can provide valuable assistance, they may lack the nuanced understanding, empathy and contextual comprehension that human interactions offer. Authentic human connection and support often prove essential during difficult times, and that is exactly where conversational AI may fall short.

Furthermore, the quality and accuracy of information provided by AI agents can vary depending on their training data and algorithms. Misinformation or biased responses can have serious consequences, particularly when people trust and rely on what a chatbot tells them.

AI chatbots are commonly perceived as objective sources of information, but it is important to be mindful of the influence of “prompt engineering.” When prompts are intentionally designed to promote specific viewpoints or agendas, users may unknowingly internalize those perspectives and accept them as objective and unbiased. The result is an illusion of objectivity that can significantly shape user perceptions.

AI Interactions Pose Risks

The tragic incident involving Pierre, a Belgian man who died by suicide after prolonged interactions with an AI chatbot, is a poignant reminder of the risks of human engagement with AI systems. The incident highlights how unintended biases or manipulation can enter interactions between humans and AI models. Through the prompts they supply, users may unknowingly perpetuate skewed perspectives or reinforce biases already present in the training data. These outcomes can exacerbate societal inequities, foster polarizing viewpoints and, in extreme cases, even produce fatal advice.

Because AI chatbots may become the primary avenue for communication and content creation, understanding their implications requires careful consideration of their broader impact on individuals and on society as a whole.

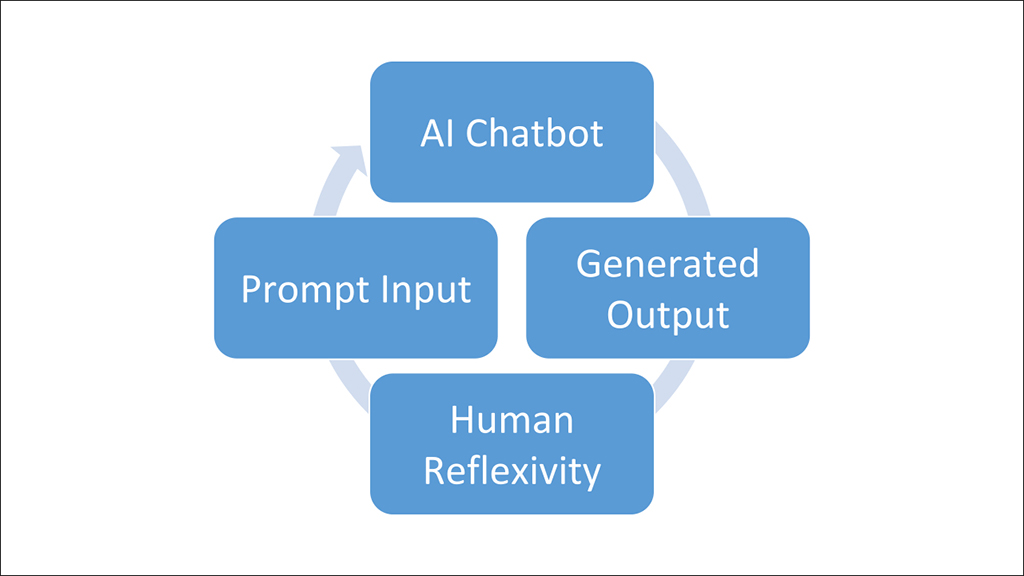

Second-order cybernetics offers valuable insights into the role of the observer in shaping the observed system. It emphasizes reflexivity: the ability to observe and modify one’s own observations and actions. This concept aligns with the high standards of journalism, which expect practitioners to question their own assumptions. Viewed through this framework, prompt engineering is a feedback loop made up of the prompt, the generated output and the evaluation of that output. In each iteration, the user adjusts the prompt based on the results and on self-reflection, working toward the desired outcome and helping the generative AI model produce tailored, high-quality content.


Prompt engineering is inherently subjective, influenced by the biases, intentions, and objectives of different “prompt engineers.” This subjectivity highlights the observer-dependent nature of prompt engineering, where the designed prompts can subtly promote specific agendas or biases, potentially reinforcing a distorted understanding of information and viewpoints.

To maintain a balanced and ethical approach, chatbot users should be aware of their own biases and strive for fairness, inclusivity and responsible use of AI technology in their prompt engineering practices. This requires ongoing examination of the prompts they use so that unintentional biases are not introduced or perpetuated.

Furthermore, the principles of second-order cybernetics highlight the interconnectedness and co-creation between observers (content creators) and systems (generative AI models). Prompt engineering can be viewed as a collaborative process where content creators provide prompts and evaluate the generated output, enabling them to learn from the model's responses, adapt their prompts accordingly, and refine their understanding of the system's capabilities and limitations. This iterative approach allows for a more informed and mindful prompt engineering process.

Ethical Concerns for Content Creators

This perspective raises important ethical questions about the role and responsibilities of observers in shaping AI systems. Prompt engineering carries ethical implications, as the choice of prompts can significantly influence the biases, fairness and quality of the AI model’s output. Content creators bear the responsibility of being mindful of these ethical considerations, striving to mitigate biases, promote inclusivity and ensure responsible use of AI technology in their prompt engineering practices.

In the context of citizen journalism, journalistic practices become especially important in prompt engineering. Human oversight and critical thinking are indispensable. Journalists must exercise professional judgment, independently verify information and uphold ethical standards throughout their work. While generative AI models can serve as valuable tools to support and enhance journalistic work, the ultimate responsibility for accuracy, fairness and journalistic integrity lies with human journalists.

In conclusion, while AI chatbots offer valuable support and enhance communication experiences, users must be aware of the limitations and risks of relying solely on AI agents. Prompt engineering practices should be guided by self-awareness, fairness, inclusivity and responsible use of AI technology. Applying the principles of second-order cybernetics and adhering to journalistic practices can help mitigate biases and ensure ethical outcomes. By embracing a balanced approach that combines the strengths of AI chatbots with human involvement, we can navigate the evolving landscape of AI technology and foster a future where AI benefits society in a responsible and inclusive manner.

Ling Ling Sun is chief technology officer at Nebraska Public Media.