Citing Potential Human Extinction, Senators Seek Artificial Intelligence Inquiry

See possible direct harm to vulnerable populations



A pair of Democratic senators have called on the Government Accountability Office to investigate the potential harms of generative artificial intelligence (AI), algorithms that can create everything from videos and music to software code and product design.

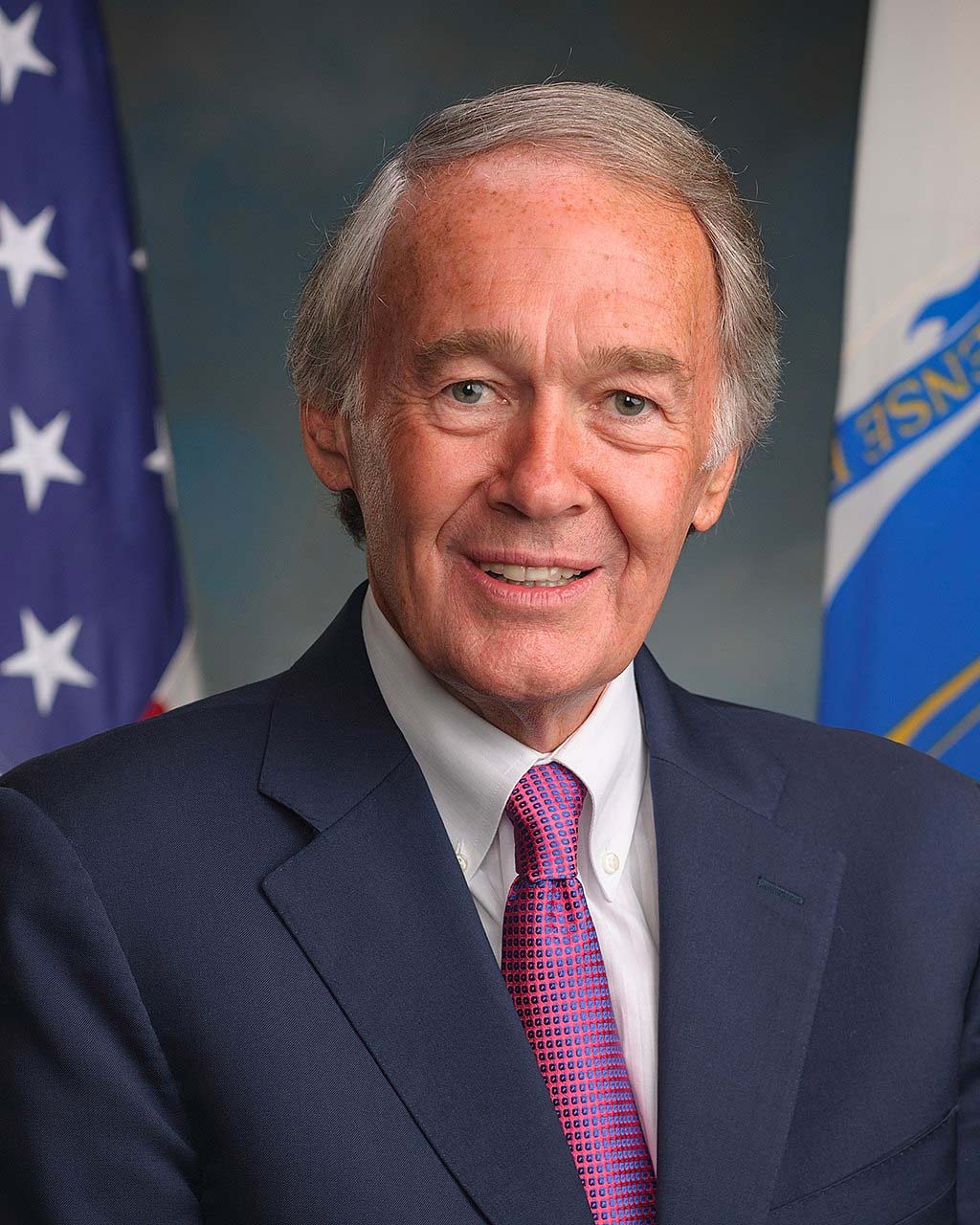

In a letter to U.S. Comptroller General Gene Dodaro, Sens. Ed Markey (D-Mass.) and Gary Peters (D-Mich.), the latter the chair of the Senate Homeland Security and Governmental Affairs Committee, said a detailed technology assessment was called for given the potential for generative AI to “directly harm vulnerable communities or risk widespread injury, death or human extinction.”

While AI has many potential benefits, they said, it is already demonstrating “significant harms.”

One concern they cite is “the potential to ‘jailbreak’ generative AI models and circumvent developer controls.”

Facebook has faced the ire of Capitol Hill over that issue. Earlier this month, Sens. Richard Blumenthal (D-Conn.) and Josh Hawley (R-Mo.), chair and ranking member of the Senate Judiciary Tech and Privacy Subcommittee, respectively, wrote to Facebook parent company Meta seeking answers about why details of its “Large Language Model Meta AI (LLaMA)” program were leaked.

Markey and Peters also have some questions, saying the GAO investigation should look to answer the following:

- “What influence do commercial pressures, including the need to rapidly deploy products, have on the time allocated to pre-deployment testing of commercial models?”

- “What security measures do AI developers take to avoid their trained models being stolen by cyberattackers?”

- “How do generative AI models rely on human workers for the process of data labelling and removing potentially harmful outputs?”

- “What is known about potential harms of generative AI to various vulnerable populations, (for example, children, teens, those with mental health conditions, and those vulnerable to scams and fraud) and how are providers monitoring and mitigating such harms?”

- “What are the current, and potential future environmental impacts, of large-scale generative AI deployment? This can include the impacts on energy consumption and grid stability, as well as the generation of e-waste, associated with the large-scale data-centers required to run AI models.”

- “What is known about the potential risks from increasingly powerful AI that could lead to injury, death, or other outcomes — up to human extinction — and how can such risks be addressed?”


Contributing editor John Eggerton has been an editor and/or writer on media regulation, legislation and policy for over four decades, including covering the FCC, FTC, Congress, the major media trade associations, and the federal courts. In addition to Multichannel News and Broadcasting + Cable, his work has appeared in Radio World, TV Technology, TV Fax, This Week in Consumer Electronics, Variety and the Encyclopedia Britannica.