Dark Horizon for the Cloud

Back in 2013, the Entertainment Technology Center at the University of Southern California (ETC@USC)—a Hollywood technology think tank/nonprofit operating within the university’s School of Cinematic Arts—launched a unique “production in the cloud” project.

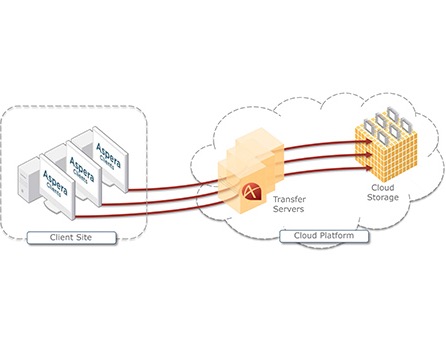

The program’s goal was simple: bring together the major studios and the companies specializing in cloud technologies, and put both on the same page to facilitate cloud-based content creation, production and distribution. Since its launch, ETC@USC has brought cloud content heavyweights such as Google and IBM’s Aspera on board to weigh in. It has also championed the Cinema Content Creation Cloud (C4) open-source framework for media production, which assigns a unique digital ID to content files.

Yet Erik Weaver, project manager for ETC@USC’s cloud program since it launched, looks at the state of content offered in the cloud today, and sees a real problem: the cloud-centric technology companies may not be ready to carry and relay all the extra digital weight associated with 4K video, high-dynamic range (HDR), wide color gamut and the added, advanced audio codecs included with the studio and broadcast content waiting in the pipeline.

“We’re really, absolutely on the edge of a massive smackdown of data, and things are about to explode,” Weaver said. “It’s a massive onslaught of data coming down the pipe.”

Darcy Antonellis, the former chief technology officer of Warner Bros. and current CEO of multiplatform video services company Vubiquity, raised exactly this concern during the recent NAB Show in Las Vegas. She pointed to the size of the cloud-based files associated with filmmaker Peter Jackson’s The Hobbit: An Unexpected Journey (released in 2012): as many as 20 petabytes of data were being moved via the studio’s cloud services, she said, a massive amount of content for any one film at the time.

“You got into high frame rates, 4K…and HDR, and visual effects, and you start to add all of that up,” she said. And when it comes down to delivering—and accessing—that content via the cloud: “It comes down to price and availability,” she said.

Google, Akamai, IBM, Amazon Web Services, Harmonic, Verizon and more made a major push with their cloud-based delivery solutions at the April NAB Show. And according to a late-April report from Cisco Systems, it’s no surprise why: The tech company’s “Global Cloud Index” report speculates that by 2019, more than 85% of online content workloads will be handled by cloud data centers.

“In just the past year, a variety of businesses and organizations have reported their plans for cloud migration or adoption,” the Cisco report stated. “For example, Netflix announced plans to shut down the last of its traditional data centers [in] 2015, a step that [makes] it one of the first big companies to run all of its IT in the public cloud.”

Still, even though her company has been offering cloud-based solutions for 4K delivery for more than five years, Michelle Munson, president, CEO and cofounder of IBM’s Aspera, said she understands the frustrations associated with the delivery needs of today’s cloud content companies.

“Production needs cloud infrastructure [that] often isn’t ready or even existent, and the biggest change of all is that you can get access to the IT [needed] to support it, from wherever you happen to be,” Munson said. “More broadband is available today, and that’s the huge driver for all these cloud service offerings. And the intent to create some centralization, whether it’s a public or private cloud environment, is [occurring].”