Senators Push Kids' Online Safety Bill

Legislation targets social media for issues including bullying, substance abuse and suicide

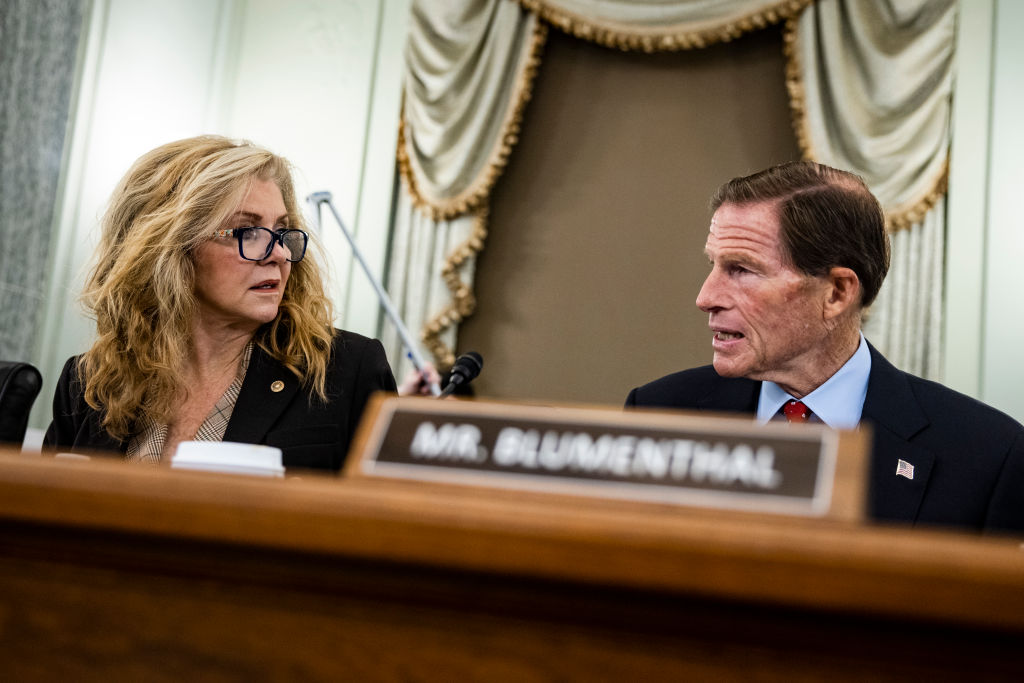

A bipartisan Senate duo sought this week to put a spotlight on the Kids Online Safety Act (KOSA), legislation that would crack down on Big Tech. They are trying to get the bill passed in the waning, lame-duck days of the current session of Congress.

Sens. Richard Blumenthal (D-Conn.) and Marsha Blackburn (R-Tenn.), chair and ranking member, respectively, of the Senate Commerce Subcommittee on Consumer Protection, Product Safety and Data Security, met this week with young people, and with parents of young people, who the senators said had “died or were harmed” due to social media.

Among those they met with was the mother of a girl who died of fentanyl poisoning “from drugs she and a friend purchased from a dealer they used Facebook to find,” Blumenthal’s office said. He is a co-sponsor of the bill.

S. 3663, the Kids Online Safety Act, passed unanimously in the Senate Commerce Committee last July. It would attempt to protect children's online mental health, including addressing issues like body image, eating disorders, substance abuse, and suicide.

Blumenthal and Blackburn led hearings on social media and its impact on children.

The result was the bill, which:

- “Requires that social media platforms provide minors with options to protect their information, disable addictive product features, and opt out of algorithmic recommendations. Platforms would be required to enable the strongest settings by default.

- “Gives parents new controls to help support their children and identify harmful behaviors, and provides parents and children with a dedicated channel to report harms to kids to the platform.

- “Creates a responsibility for social media platforms to prevent and mitigate harms to minors, such as promotion of self-harm, suicide, eating disorders, substance abuse, sexual exploitation, and unlawful products for minors (e.g. gambling and alcohol).

- “Requires social media platforms to perform an annual independent audit that assesses the risks to minors, their compliance with this legislation, and whether the platform is taking meaningful steps to prevent those harms.

- “Provides academic and public interest organizations with access to critical datasets from social media platforms to foster research regarding harms to the safety and well-being of minors.”

“The [legislation] will hold social media companies accountable and establish a duty of care for protecting children online,” Parents Television & Media Council president Tim Winter said in July.

Contributing editor John Eggerton has been an editor and/or writer on media regulation, legislation and policy for over four decades, including covering the FCC, FTC, Congress, the major media trade associations, and the federal courts. In addition to Multichannel News and Broadcasting + Cable, his work has appeared in Radio World, TV Technology, TV Fax, This Week in Consumer Electronics, Variety and the Encyclopedia Britannica.